Experiments

Room Modes: Experiment 1

After my discussion with Tim Gulsrud about acoustics and resonance, I became fascinated with room modes. Every room has "resonant" frequencies at which a sound wave creates a standing wave. The wave forms points of reinforcement and cancellation throughout the space, so a person can walk around and hear how the sound builds and dies. It is a very visceral feeling. I remember experiencing this in my physics of sound class in undergrad, and I wanted to experiment with it again: to feel how it felt and to explore some potential artistic implications of room modes.

Over at Signal-to-Noise Media Labs, a creative collective of artists and scientists, I worked with Jeff Merkel to find the resonant frequency of the room we were in. The equation is quite simple: the fundamental frequency, (f), is equal to the speed of sound, (v), divided by 2 times the length of your room, (L), or f = v / (2L). This is the same equation used to find the fundamental frequency of a tube closed at both ends. To find the harmonics, you simply multiply the fundamental by increasing integers.
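The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the tool we used in the session: the function name `axial_modes`, the 343 m/s speed of sound (dry air at roughly 20 °C), and the 7 m room length are all assumptions chosen for the example.

```python
def axial_modes(length_m, count=5, speed_of_sound=343.0):
    """Return the first `count` mode frequencies (Hz) along one room dimension.

    Uses f = v / (2L) for the fundamental and integer multiples for the
    harmonics, as described in the text.
    """
    if length_m <= 0:
        raise ValueError("length_m must be positive")
    fundamental = speed_of_sound / (2.0 * length_m)
    return [n * fundamental for n in range(1, count + 1)]


# Hypothetical 7 m room dimension, not a measurement from the session.
modes = axial_modes(7.0, count=4)
print([round(f, 1) for f in modes])  # [24.5, 49.0, 73.5, 98.0]
```

Each of these frequencies could then be played as a sine wave. The sketch only covers one dimension of the room; width and height would each give their own series.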

Once we had calculated the fundamental frequency of the room and the corresponding harmonics, we played a sine wave at each of these frequencies through a subwoofer placed near the edge of the room. The modes were powerful and physically palpable. I was eager to explore further what potential there was for working with the physics of a space, and how I could even create a composition based on the relationship of sound to space.

Room Modes: Experiment 2

To continue with my latest obsession with room modes, I have been thinking up ideas for how to visualize the modes in a space. I wondered whether a large subwoofer could displace enough air to move a substance, such as fog from a fog machine, into the peaks and troughs of the wave. I borrowed a fog machine to test this thought. Within a few seconds of turning the fog machine on, the room filled with fog.

The experiment was inconclusive, as fog tends to fill the room and makes it hard to observe anything more than the fog in front of your face. I would like to experiment further with other media, such as dry ice or bubbles.

Brainwaves

Through my interviews with the varied psychologists I had connected with, I was becoming more and more interested in what was going on in our brains when we connected or ‘resonated’ with something or someone. I thought it would be interesting to play with a brain wave scanner to explore what it might look like to be on the same ‘wavelength’ as someone else. In my discussions with the psychologists I found no one had a specific understanding of why we connect with people the way we do and what is happening in the brain when we connect. What does it look like when you are ‘resonating’?

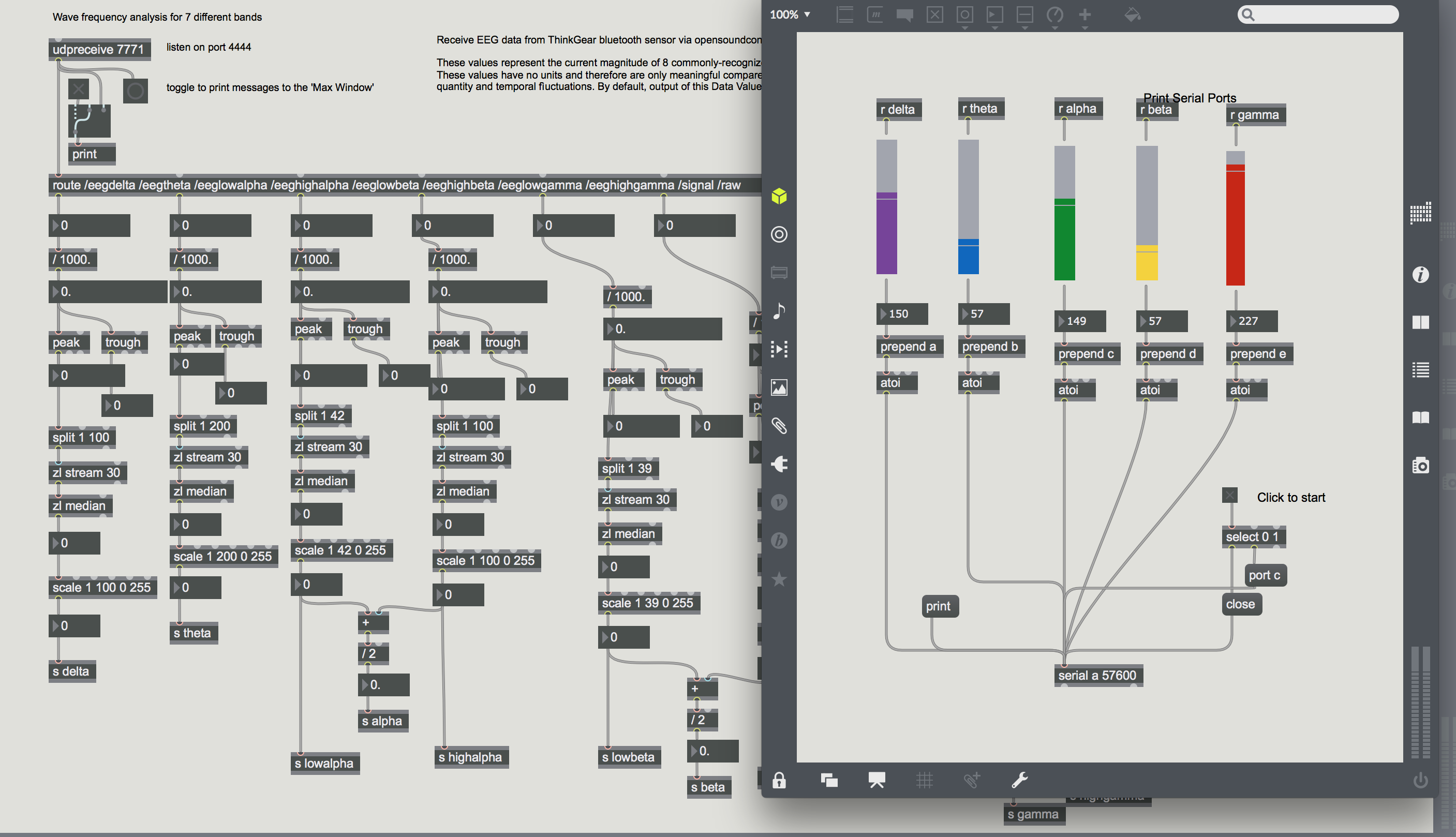

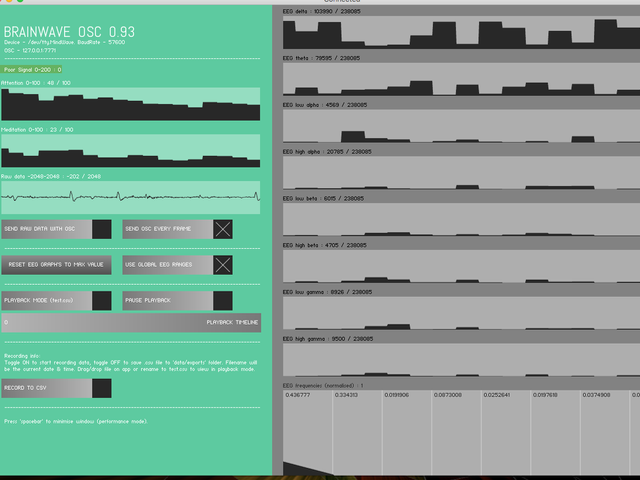

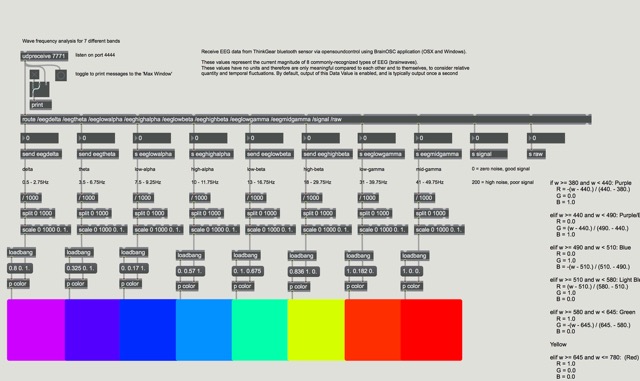

I borrowed a friend’s NeuroSky Mindwave and began my first attempts to get it working. I found an app called BrainWaveOSC, built by Trent Brooks, that takes the data from the NeuroSky Mindwave and translates it into OSC messages. Trent Brooks also had a Max MSP patch that routed all the messages. I installed the drivers for the Mindwave, followed his directions, and was soon seeing the brainwave data flow into Max MSP.

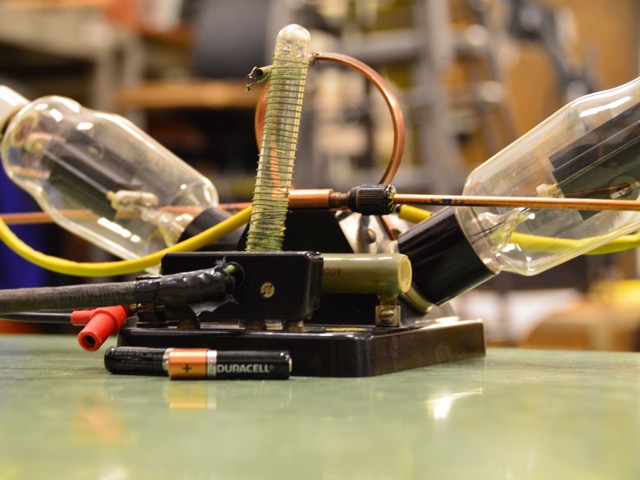

Room Modes: Electromagnetic

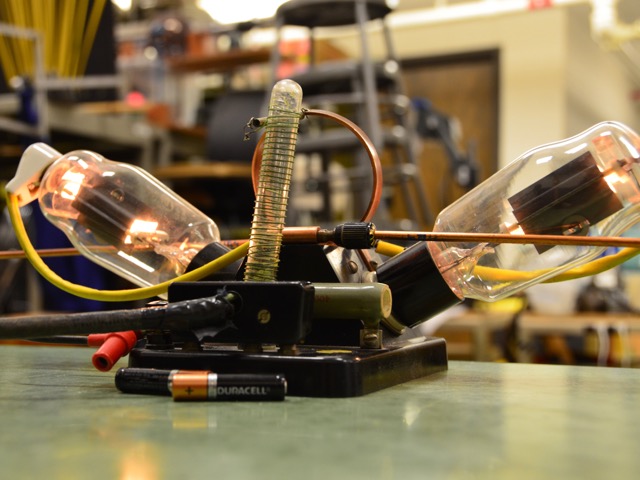

As I was ruminating on ways to visualize room modes, I reached out to Michael Thomason, who is responsible for taking care of and creating demos for the physics lab on campus. I was interested to hear his take on how to visualize sound waves in a space. He thought for a moment and then mentioned that you could visualize electromagnetic waves in a three-dimensional environment by using a radio transceiver to emit a radio wave at a certain frequency. You attach a flashlight bulb to an antenna that is about the same length as the radio wave, and when the bulb is placed at a point of constructive interference in the room it lights up. The experiment is also affected by orientation: changing the antenna between the horizontal and vertical planes turns the bulb ‘on’ and ‘off’ as well. This was an amazing demonstration of physics and a concept definitely worth further research.

Brainwaves Jam

I wanted to visualize the brainwave data so that I could explore the relationship of brainwaves to action in a way that was more accessible to observers and myself. To do this, I mapped the brainwave frequencies to the visible light spectrum and connected a color panel to each brainwave in the Max MSP patch. The more present a brainwave was, the more intense its associated color. I noticed a large amount of delta wave activity in the data stream. This didn’t make a lot of sense, as delta waves are mostly associated with deep sleep. I figured out that the global EEG range field in the BrainWaveOSC app needed to be turned on in order to get a more evenly distributed reading.

The other frustrating element of the app was that the data values for each brainwave fell in very different ranges, and I was unable to find the maximum and minimum of the data points. I ended up having to guess which numbers to use for scaling the data into a usable format. This made the color mapping cruder than I would have liked.
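The scaling problem can be illustrated with a short Python sketch. It is not the Max MSP patch itself: `scale_to_unit` stands in for scaling with guessed bounds, and the `RunningRange` class is a hypothetical alternative, not something I used, that tracks the smallest and largest values seen so far instead of guessing them. All names and sample numbers are assumptions made for the example.

```python
def scale_to_unit(value, low, high):
    """Linearly map value from [low, high] to [0.0, 1.0], clamping out-of-range input."""
    if high <= low:
        raise ValueError("high must be greater than low")
    fraction = (value - low) / (high - low)
    return min(1.0, max(0.0, fraction))


class RunningRange:
    """Track the min and max seen so far so bounds do not have to be guessed."""

    def __init__(self):
        self.low = None
        self.high = None

    def update(self, value):
        self.low = value if self.low is None else min(self.low, value)
        self.high = value if self.high is None else max(self.high, value)

    def scale(self, value):
        # Until two distinct values have been seen there is no usable range.
        if self.low is None or self.high == self.low:
            return 0.0
        return scale_to_unit(value, self.low, self.high)


# Made-up readings for one brainwave band, for illustration only.
band_range = RunningRange()
for reading in [1200, 45000, 8000]:
    band_range.update(reading)
    print(round(band_range.scale(reading), 3))
```

A running range adapts to each band separately, at the cost of the color intensity shifting as new extremes arrive.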

Once I had finished building and mapping the colors to the brainwaves I put on the brainwave scanner and began to play piano. I felt the color representation was an interesting way to have a ‘glimpse’ into the way my brainwaves were responding as I was ‘resonating’ with the music I was playing. I began to think of ways to incorporate this visualization into my performance.

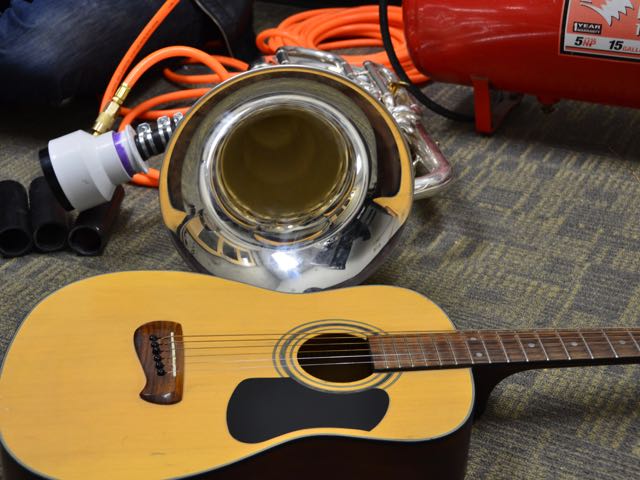

Sympathetic Resonance

I had recently been introduced to a composer in CU’s music department, Zachary Patten. He had developed a device that allowed him to play brass instruments using compressed air. I found his aesthetics and artistic motivations incredibly interesting and inspiring. I asked if he wanted to compose a piece for my production based on concepts of sympathetic resonance. I had been inspired by this form of resonance while talking with Michael Theodore, a professor in the music department at ATLAS. Sympathetic resonance is a phenomenon in which one vibratory body can cause another of similar harmonic likeness to vibrate. To explore how we might use this physical process, we tried using blasts of different tones from the brass instruments to vibrate the strings of a guitar. We also used a speaker placed underneath a drumhead to vibrate the body of the drum. While you could hear and feel the vibrations of the guitar strings and drumhead, the effect was subtle. We would need to find a way to amplify it if we wanted an audience to connect with the idea.

Spatial Piano Recording

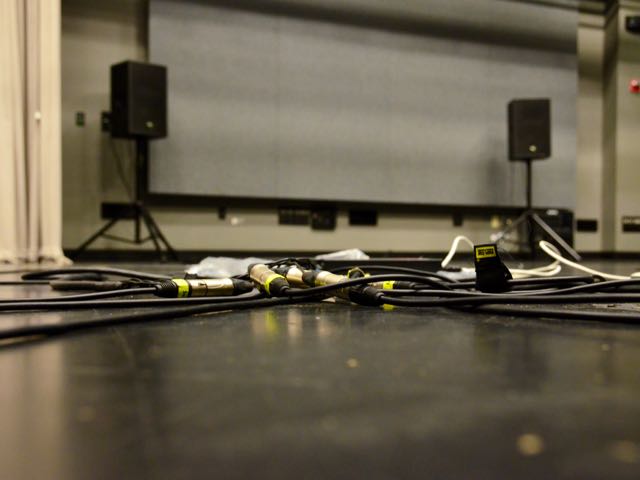

As an audio engineer, I have been experimenting with creating work for spatial multichannel environments for a while. I knew that I wanted to incorporate a spatial audio component into my final production. I went into the Black Box Theater with Leslie Gaston to test out some ideas on different miking techniques to be integrated into the final performance. I wanted to explore the relationship of sound between a piano that is physically in the space and one that has been projected around the audience.

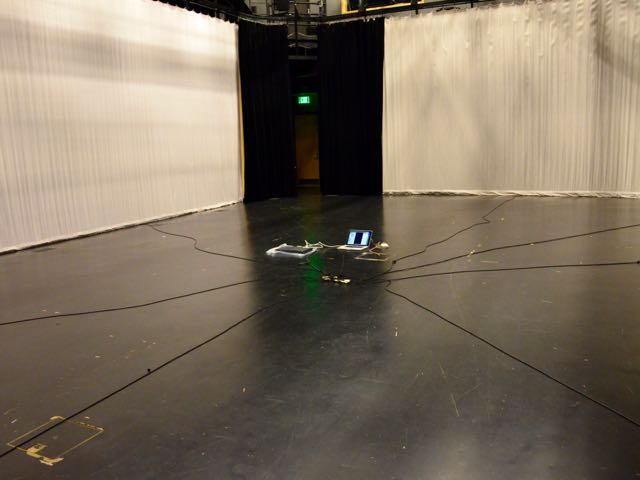

The setup process took a little more time than I had hoped. We spent a lot of time trying to figure out the best way to create a workflow in the space. The speakers were not already set up, so we first had to place all of them around the space in a circle. We used an 8 channel Presonus Firestudio to interface my computer to all of the speakers. I used a Max MSP patch I had constructed in conjunction with Signal-to-Noise Media Labs to do the spatialization. For recording, we used the ProTools setup that the Black Box had. Since we were monitoring from my computer downstairs but recording on the ProTools system upstairs, we had to figure out the best way to get the audio files to me for playback.

We explored our options for networking the computers to make the file transfer process a bit smoother. Initially we attempted to set up Dante Virtual controller to send audio over IP, but the Black Box computer and ProTools rig were too old and wouldn't recognize the install files. We then attempted a file share over Ethernet between my computer and the ProTools computer, but struggled to make the connection. We finally settled on transferring the files from the ProTools computer to another computer in the control room that had Airdrop, and used that to send the files to my computer wirelessly. I was then able to spatialize them and hear what the recordings sounded like. The whole process was a bit cumbersome and made me desire a space that was built for this kind of experimentation.

Once we had everything set up, we dove into exploring some different microphone placements. We were limited to 12 microphones at one time due to the number of XLR cables available. We were also limited in microphone choices, but we made do with what was available to us. To begin, we arranged four Sennheiser 421s in a modified Hamasaki Square around the piano. We used two Crown PCC160 boundary microphones on the metal plates that spanned below the piano connecting the legs. Finally, we used a DPA 5100 Mobile 5.1 Surround Sound Microphone placed underneath the piano at the base, with the center facing towards the front of the piano. This gave us 12 channels, which we recorded to the Black Box's ProTools setup.

We only had time to get through one setup, but it still gave me some good ideas for further research. Following the session, I have been experimenting with the spatialization of the microphone setup in my Max patch. I have tried different ways of grouping the channels of the recording and controlling their motion in the spatial environment. I feel I understand the workflow better now, so next time I can be more prepared and efficient with time. I would like to record with as many different miking setups at the same time as possible so I can use them in different ways in the final mix. I also have some better ideas about the notes and piano expressions that I want to capture; for example, I think it would be good to capture more individual notes and runs.

Black Box Tests

I went into the Black Box again to spend some time testing out and experimenting with ideas. I spent a decent amount of time setting up the 8-speaker array again, as well as pulling out the curtains to where I would want them for the performance. Once everything was set up, I started playing back some of the tracks I had recorded a few days previously. I really liked the overall sound of the tracks, but realized that having some moments of individual notes would allow me to do some interesting things with moving the melody around the space. The other thing I tested was the acoustical transparency of the scrim. I was happy to find that it didn't seem to affect the sound, so I should be able to wrap the scrim all the way around the space to projection map on.

I also experimented with taking the recordings of the interviews I had done and assigning each of them to their own speaker. When standing in the middle of the room all you heard was the cacophony of conversations, but at moments elements of the individual conversations would grab your attention. If you moved closer to any one speaker you were pulled into that discussion and exploration of resonance. Each individual interview seemed like a work of art. I enjoyed the prospect of creating a gallery with conversations hung on the walls.

Recording Sympathetic Resonance

For one of the movements of the performance, I tasked Zach Patten with composing a piece based on sympathetic resonance. I challenged him to use only sounds created and inspired by sympathetic resonance. To collect sounds, we organized a recording session where we explored a few different ways to generate sound using this concept. One of the most basic physics demonstrations of sympathetic resonance involves two tuning forks attached to resonance boxes: when one is struck, the other starts to vibrate too. We borrowed some tuning forks from the physics department and recorded 33 different tuning forks.

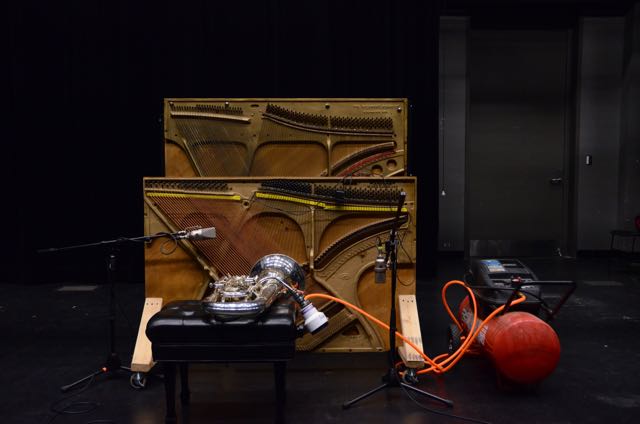

Zach had also developed a way to play brass instruments using compressed air and had recently acquired a couple of piano harps. We set up the piano harps and used five microphones to record them as they were being blasted with a tuba and a baritone.

My new favorite instrument is Marbles on Drum. We set up four Sennheiser 421s (I wouldn’t recommend these for this kind of recording, as you need a lot of gain, but they were the only microphone we had four of) to record an improvisation of me placing marbles on the drum while finding different resonant frequencies of the drumhead to vibrate them.

Brainwaves Dress

To follow up on my earlier experiments with visualizing brainwaves, I decided to work with Emily Daub to design and sew LEDs onto a dress that would respond to my brainwaves.

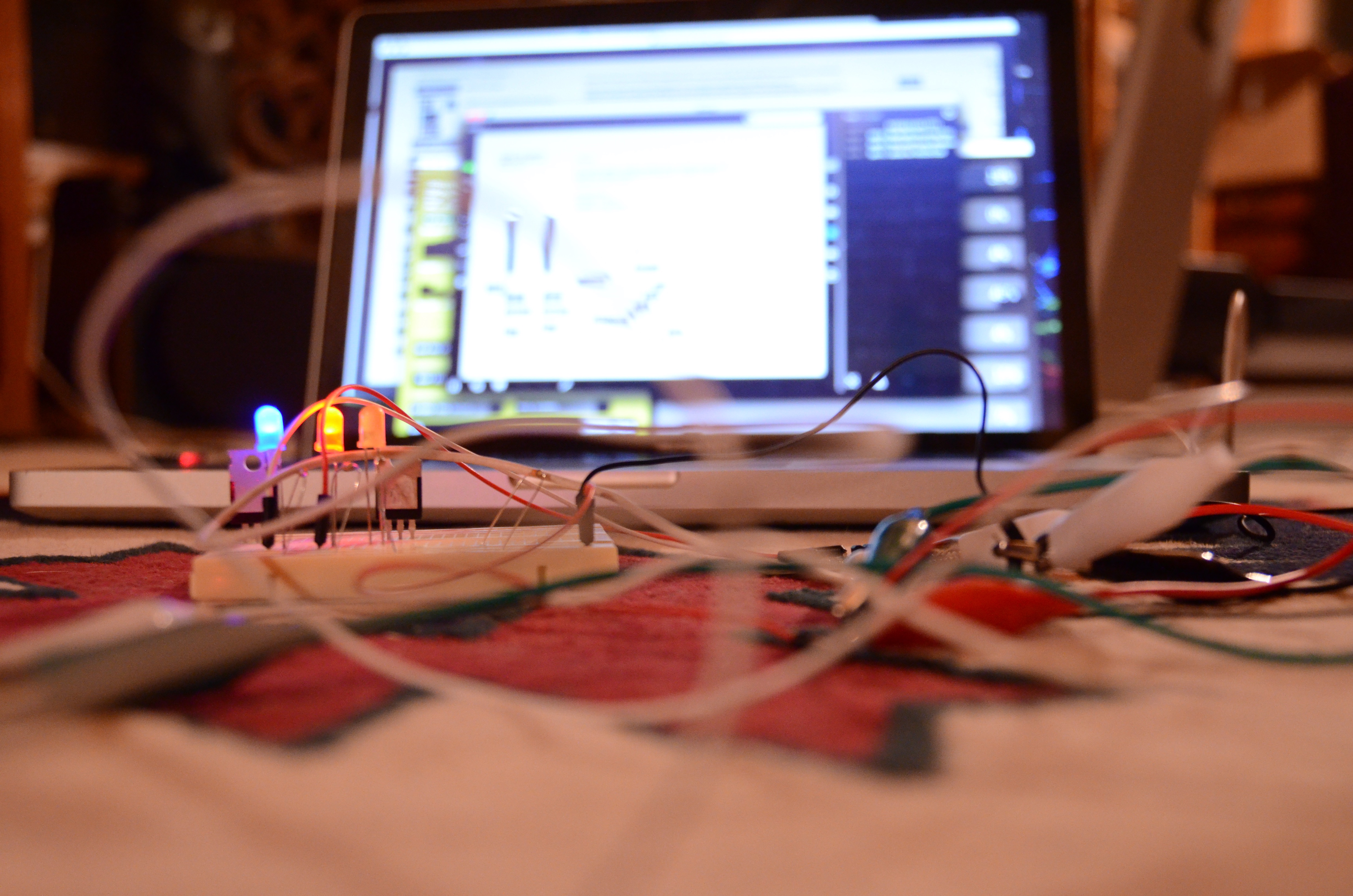

On the technological side, I modified the initial Max MSP patch to send values to a Lilypad that controlled the brightness of different colored lights. The first step, once I had the brainwave data coming into Max MSP, was to send the data from Max MSP to an Arduino Uno and use it to control the intensity of a basic LED. I started by getting one LED working. Once I had code that allowed me to change the intensity of the light, I connected it to Max MSP, using a serial object in Max to output serial data to be read by the Arduino. For each of the five main brainwaves, I scaled the incoming values between 0 and 255 and then used a slider to simulate information coming in from the brainwave. Once I was able to control one LED with the slider in Max MSP, I hooked up the rest of the brainwaves and had to devise a way to send multiple streams of data through the serial stream and parse them out according to their respective brainwave. I did this by attaching a letter value to the beginning of each string and then parsing out the brightness values based on the corresponding letter. Once the brightness of five different LEDs was being controlled by the Max MSP patch, I moved the code to the Lilypad and connected that to an Xbee Lilypad breakout board so that I could use the Xbees to communicate wirelessly between the Max MSP patch and the LEDs.
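The letter-prefix scheme described above can be sketched in Python. This is an illustration of the idea rather than the Max MSP patch or the Arduino code: the five band names, the single-letter tags in `BAND_TAGS`, and the newline terminator are all assumptions made for the example.

```python
# Hypothetical tag letters; the actual letters used in the patch are not recorded here.
BAND_TAGS = {"delta": "d", "theta": "t", "alpha": "a", "beta": "b", "gamma": "g"}
TAG_TO_BAND = {tag: band for band, tag in BAND_TAGS.items()}


def encode_message(band, brightness):
    """Build one serial message: a tag letter, a 0-255 brightness, and a newline."""
    level = max(0, min(255, int(brightness)))  # clamp to the 8-bit LED range
    return f"{BAND_TAGS[band]}{level}\n"


def parse_message(line):
    """Split a received message back into (band, brightness), as the receiver would."""
    line = line.strip()
    if len(line) < 2 or line[0] not in TAG_TO_BAND or not line[1:].isdigit():
        raise ValueError(f"malformed message: {line!r}")
    return TAG_TO_BAND[line[0]], int(line[1:])


# Round trip for two bands sharing one stream.
stream = encode_message("alpha", 180) + encode_message("delta", 300)
for raw in stream.splitlines():
    print(parse_message(raw))
```

Prefixing each value with a letter lets all five bands share one serial connection, since the receiver can route every brightness value to the right LED from the first character alone.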

At the end of the process, the signal chain worked as follows. The brainwave scanner on my head sent data over Bluetooth to my computer, where it was interpreted by the Openframeworks application built by Trent Brooks and sent via OSC messages to Max MSP. There, all of the data was received and processed before being sent from the USB Xbee breakout plugged into my computer to the Lilypad Xbee breakout. The connected Lilypad took that information and controlled the brightness of five different colored strands of LEDs, each strand associated with one of five of my brainwaves.